Expected value

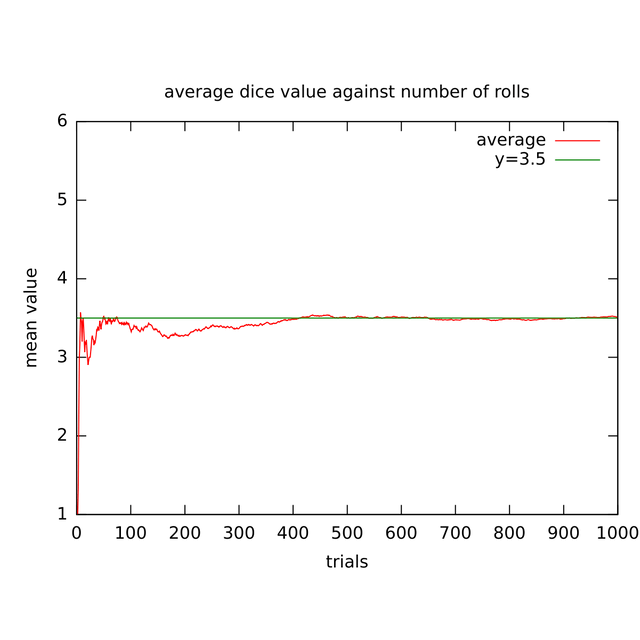

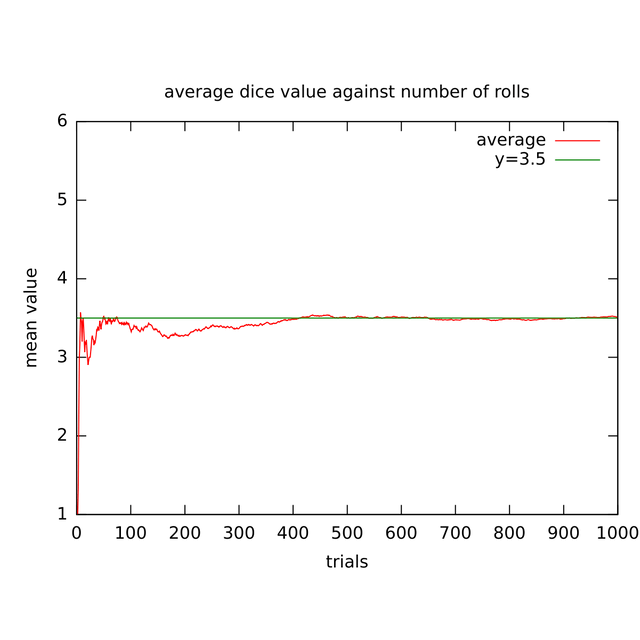

In probability theory, the expected value of a random variable, intuitively, is the long-run average value of repetitions of the same experiment it represents. For example, the expected value in rolling a six-sided die is 3.5, because the average of all the numbers that come up is 3.5 as the number of rolls approaches infinity (see § Examples for details). In other words, the law of large numbers states that the arithmetic mean of the values almost surely converges to the expected value as the number of repetitions approaches infinity. The expected value is also known as the expectation, mathematical expectation, EV, average, mean value, mean, or first moment.

More practically, the expected value of a discrete random variable is the probability-weighted average of all possible values. In other words, each possible value the random variable can assume is multiplied by its probability of occurring, and the resulting products are summed to produce the expected value. The same principle applies to an absolutely continuous random variable, except that an integral of the variable with respect to its probability density replaces the sum. The formal definition subsumes both of these and also works for distributions which are neither discrete nor absolutely continuous; the expected value of a random variable is the integral of the random variable with respect to its probability measure.[1][2]

The expected value does not exist for random variables having some distributions with large "tails", such as the Cauchy distribution.[3] For random variables such as these, the long-tails of the distribution prevent the sum or integral from converging.

The expected value is a key aspect of how one characterizes a probability distribution; it is one type of location parameter. By contrast, the variance is a measure of dispersion of the possible values of the random variable around the expected value. The variance itself is defined in terms of two expectations: it is the expected value of the squared deviation of the variable's value from the variable's expected value (Var(X) = E[(X − E[X])²] = E[X²] − (E[X])²).

The expected value plays important roles in a variety of contexts. In regression analysis, one desires a formula in terms of observed data that will give a "good" estimate of the parameter giving the effect of some explanatory variable upon a dependent variable. The formula will give different estimates using different samples of data, so the estimate it gives is itself a random variable. A formula is typically considered good in this context if it is an unbiased estimator—that is if the expected value of the estimate (the average value it would give over an arbitrarily large number of separate samples) can be shown to equal the true value of the desired parameter.

In decision theory, and in particular in choice under uncertainty, an agent is described as making an optimal choice in the context of incomplete information. For risk neutral agents, the choice involves using the expected values of uncertain quantities, while for risk averse agents it involves maximizing the expected value of some objective function such as a von Neumann–Morgenstern utility function. One example of using expected value in reaching optimal decisions is the Gordon–Loeb model of information security investment. According to the model, one can conclude that the amount a firm spends to protect information should generally be only a small fraction of the expected loss (i.e., the expected value of the loss resulting from a cyber or information security breach).[4]

Definition

Finite case

Let X be a random variable with a finite number of finite outcomes x₁, x₂, …, xₖ occurring with probabilities p₁, p₂, …, pₖ, respectively. The expectation of X is defined as

![{\displaystyle \operatorname {E} [X]=\sum _{i=1}^{k}x_{i}\,p_{i}=x_{1}p_{1}+x_{2}p_{2}+\cdots +x_{k}p_{k}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/519542ccdb827d224e730020a1f0c0ce675297d3)

Since all probabilities pᵢ add up to 1 (p₁ + p₂ + ⋯ + pₖ = 1), the expected value is the weighted average, with the pᵢ's being the weights.

If all outcomes xᵢ are equiprobable (that is, p₁ = p₂ = ⋯ = pₖ), then the weighted average turns into the simple average. This is intuitive: the expected value of a random variable is the average of all values it can take; thus the expected value is what one expects to happen on average. If the outcomes xᵢ are not equiprobable, then the simple average must be replaced with the weighted average, which takes into account the fact that some outcomes are more likely than others. The intuition, however, remains the same: the expected value of X is what one expects to happen on average.

Examples

- If one rolls a fair six-sided die n times and computes the average (arithmetic mean) of the results, then as n grows, the average will almost surely converge to the expected value, a fact known as the strong law of large numbers. One example sequence of ten rolls of the die is 2, 3, 1, 2, 5, 6, 2, 2, 2, 6, which has an average of 3.1, at a distance of 0.4 from the expected value of 3.5. The convergence is relatively slow: the probability that the average falls within the range 3.5 ± 0.1 is 21.6% for ten rolls, 46.1% for a hundred rolls and 93.7% for a thousand rolls. See the figure for an illustration of the averages of longer sequences of rolls of the die and how they converge to the expected value of 3.5. More generally, the rate of convergence can be roughly quantified by e.g. Chebyshev's inequality and the Berry–Esseen theorem.

- The roulette game consists of a small ball and a wheel with 38 numbered pockets around the edge. As the wheel is spun, the ball bounces around randomly until it settles down in one of the pockets. Suppose random variable X represents the (monetary) outcome of a $1 bet on a single number ("straight up" bet). If the bet wins (which happens with probability 1/38 in American roulette), the payoff is $35; otherwise the player loses the bet. The expected profit from such a bet will be E[gain from $1 bet] = −$1 · 37/38 + $35 · 1/38 = −$1/19 ≈ −$0.0526. That is, the bet of $1 stands to lose $0.0526, so its expected value is −$0.0526.
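
The two examples above can be checked with a short calculation. The following is a minimal sketch, not part of the original article: the helper name `expected_value` is an assumption chosen for illustration, and exact fractions are used so that no rounding enters the result.

```python
from fractions import Fraction


def expected_value(outcomes, probabilities):
    """Probability-weighted average of a finite list of outcomes."""
    probabilities = list(probabilities)
    if sum(probabilities) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in zip(outcomes, probabilities))


# Fair six-sided die: outcomes 1..6, each with probability 1/6.
die_ev = expected_value(range(1, 7), [Fraction(1, 6)] * 6)

# $1 straight-up bet in American roulette: win $35 with probability 1/38,
# lose $1 with probability 37/38.
roulette_ev = expected_value([35, -1], [Fraction(1, 38), Fraction(37, 38)])

print(die_ev)              # 7/2
print(roulette_ev)         # -1/19
print(float(roulette_ev))  # approximately -0.0526
```

Using `Fraction` rather than floating point makes the equiprobable case visibly reduce to the simple average 21/6 = 7/2.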

Countably infinite case

Let X be a random variable with a countable set of outcomes x₁, x₂, …, occurring with probabilities p₁, p₂, …, respectively, such that the infinite sum |x₁| p₁ + |x₂| p₂ + ⋯ converges. The expected value of X is defined as the series

![{\displaystyle \operatorname {E} [X]=\sum _{i=1}^{\infty }x_{i}\,p_{i}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2509d047fc89077d41febe60520c076d55386608)

Remark 1. Observe that

![{\displaystyle \textstyle {\Bigl |}\operatorname {E} [X]{\Bigr |}\leq \sum _{i=1}^{\infty }|x_{i}|\,p_{i}<\infty .}](https://wikimedia.org/api/rest_v1/media/math/render/svg/001fc3dd828292a1c09491ff627029caa5e4e6a3)

Remark 2. Due to absolute convergence, the expected value does not depend on the order in which the outcomes are presented. By contrast, a conditionally convergent series can be made to converge or diverge arbitrarily, via the Riemann rearrangement theorem.
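
The order dependence mentioned in Remark 2 can be seen numerically. The sketch below is an illustration under stated assumptions, not a proof: it sums the alternating harmonic series 1 − 1/2 + 1/3 − ⋯ once in its natural order and once in a rearrangement that takes two positive terms and then one negative term. The function names are hypothetical; the two limits (ln 2 and (3/2) ln 2) are standard results.

```python
import math


def natural_order(terms):
    """Partial sum of 1 - 1/2 + 1/3 - 1/4 + ... in its natural order."""
    return sum((-1) ** (n + 1) / n for n in range(1, terms + 1))


def two_positive_one_negative(blocks):
    """Same terms, reordered as (1 + 1/3 - 1/2) + (1/5 + 1/7 - 1/4) + ..."""
    total = 0.0
    for k in range(1, blocks + 1):
        total += 1 / (4 * k - 3) + 1 / (4 * k - 1) - 1 / (2 * k)
    return total


natural = natural_order(200_000)
reordered = two_positive_one_negative(200_000)

print(natural, math.log(2))          # both close to ln 2
print(reordered, 1.5 * math.log(2))  # both close to (3/2) ln 2
```

Both loops use exactly the same terms, each once; only the order differs, which is why a series that converges only conditionally cannot serve as a definition of the expected value.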

Example

Suppose xᵢ = i and pᵢ = k/(i·2^i) for i = 1, 2, 3, …, where k = 1/ln 2 (with ln being the natural logarithm) is the scale factor such that the probabilities sum to 1. Then

E[X] = x₁p₁ + x₂p₂ + x₃p₃ + ⋯ = k/2 + k/4 + k/8 + ⋯ = k.

For an example that is not absolutely convergent, suppose random variable X takes values 1, −2, 3, −4, ..., with respective probabilities c/1², c/2², c/3², c/4², ..., where c = 6/π² is a normalizing constant that ensures the probabilities sum up to one. Then the infinite sum

x₁p₁ + x₂p₂ + x₃p₃ + ⋯ = c (1 − 1/2 + 1/3 − 1/4 + ⋯)

converges to c · ln 2 = (6 ln 2)/π² ≈ 0.4214. However, it would be incorrect to claim that the expected value of X is equal to this number; in fact ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) does not exist (finite or infinite), as this series does not converge absolutely (see Alternating harmonic series).

An example that diverges arises in the context of the St. Petersburg paradox. Let xᵢ = 2^i and pᵢ = 1/2^i for i = 1, 2, 3, …. The expected value calculation gives

x₁p₁ + x₂p₂ + x₃p₃ + ⋯ = 2 · (1/2) + 4 · (1/4) + 8 · (1/8) + ⋯ = 1 + 1 + 1 + ⋯ = ∞.

Absolutely continuous case

If X is a random variable whose cumulative distribution function admits a density f(x), then the expected value is defined as the following Lebesgue integral:

![{\displaystyle \operatorname {E} [X]=\int _{\mathbb {R} }xf(x)\,dx.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2dabe1557bd0386dc158ef46669f9b8123af5f7a)

Remark. The integral in the definition of ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) may often be treated as an improper Riemann integral of x f(x) over (−∞, +∞). Specifically, if the function x f(x) is Riemann-integrable on every finite interval ![[a,b]](https://wikimedia.org/api/rest_v1/media/math/render/svg/9c4b788fc5c637e26ee98b45f89a5c08c85f7935), and at least one of the two improper Riemann integrals

∫ from −∞ to 0 of (−x f(x)) dx and ∫ from 0 to +∞ of x f(x) dx

is finite, then the values (whether finite or infinite) of both integrals agree.
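
As a rough illustration of treating the defining integral as an ordinary Riemann integral, the sketch below approximates E[X] for an exponential density with a midpoint rule. The choice of distribution, the rate value, the truncation point 40 and the function names are all assumptions made for this example; the known mean of an exponential distribution with rate λ is 1/λ.

```python
import math


def expected_value_continuous(pdf, lower, upper, steps=200_000):
    """Midpoint-rule approximation of the integral of x * pdf(x) on [lower, upper]."""
    width = (upper - lower) / steps
    total = 0.0
    for i in range(steps):
        x = lower + (i + 0.5) * width
        total += x * pdf(x) * width
    return total


RATE = 2.0  # assumed rate parameter; the exact mean is 1 / RATE = 0.5


def exponential_pdf(x):
    """Density of an exponential distribution with rate RATE."""
    return RATE * math.exp(-RATE * x) if x >= 0 else 0.0


# The density is negligible beyond x = 40, so the integral is truncated there.
approx = expected_value_continuous(exponential_pdf, 0.0, 40.0)
print(approx)  # close to 0.5
```

Truncating the range is only safe here because the tail of x·f(x) decays exponentially; for heavy-tailed densities the truncated integral need not settle at all.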

General case

If X is a random variable defined on a probability space (Ω, Σ, P), then the expected value of X, denoted by ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93), ⟨X⟩, or X̄, is defined as the Lebesgue integral

![{\displaystyle \operatorname {E} [X]=\int _{\Omega }X(\omega )\,d\operatorname {P} (\omega ).}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f2c4265bd78bfc615c6da1f1fae310d462793187)

Remark 1. If X₊(ω) = max(X(ω), 0) and X₋(ω) = −min(X(ω), 0), then X = X₊ − X₋. The functions X₊ and X₋ can be shown to be measurable (hence, random variables), and, by definition of Lebesgue integral,

![{\displaystyle {\begin{aligned}\operatorname {E} [X]&=\int _{\Omega }X(\omega )\,d\operatorname {P} (\omega )\\&=\int _{\Omega }X_{+}(\omega )\,d\operatorname {P} (\omega )-\int _{\Omega }X_{-}(\omega )\,d\operatorname {P} (\omega )\\&=\operatorname {E} [X_{+}]-\operatorname {E} [X_{-}],\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/090ae19b8561434379cef8101ec30f37d4bbe416)

where ![{\displaystyle \operatorname {E} [X_{+}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8f2b3e32ea53f1d14dd33731c141a13a54a7da6e) and ![{\displaystyle \operatorname {E} [X_{-}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/e2248b456069f2845c8433ec1930911c10a2009c) are non-negative and possibly infinite.

The following scenarios are possible:

- E[X] is finite, i.e. max(E[X₊], E[X₋]) < ∞;
- E[X] is infinite, i.e. max(E[X₊], E[X₋]) = ∞ and min(E[X₊], E[X₋]) < ∞;
- E[X] is neither finite nor infinite, i.e. E[X₊] = E[X₋] = ∞.

Remark 2. If F_X(x) = P(X ≤ x) is the cumulative distribution function of X, then

![{\displaystyle \operatorname {E} [X]=\int _{-\infty }^{+\infty }x\,dF_{X}(x),}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b9a0b481181b4ca58a28d7743b42363f5066586b)

where the integral is interpreted in the sense of Lebesgue–Stieltjes.

Remark 3. An example of a distribution for which there is no expected value is the Cauchy distribution.

Remark 4. For multidimensional random variables, their expected value is defined per component, i.e.

![{\displaystyle \operatorname {E} [(X_{1},\ldots ,X_{n})]=(\operatorname {E} [X_{1}],\ldots ,\operatorname {E} [X_{n}])}](https://wikimedia.org/api/rest_v1/media/math/render/svg/82529dea1fae623cf096f6e7955332fa73bf791a)

Remark 5. For a random matrix X with elements Xᵢⱼ,

![{\displaystyle (\operatorname {E} [X])_{ij}=\operatorname {E} [X_{ij}].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5581856278a0f539aaa981d81fce64e5a35fff7a)
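
Remark 3 can be made concrete with a small computation. The sketch below assumes the standard Cauchy density f(x) = 1/(π(1 + x²)), which the article does not spell out, and uses the antiderivative ln(1 + x²)/(2π) of x·f(x). It shows that the truncated integral depends on how the two limits grow, so no single value can be assigned to the expected value. The function name is hypothetical.

```python
import math


def truncated_cauchy_mean(lower, upper):
    """Exact integral of x / (pi * (1 + x^2)) over [lower, upper]."""
    return (math.log1p(upper ** 2) - math.log1p(lower ** 2)) / (2 * math.pi)


for a in (1e2, 1e4, 1e6):
    symmetric = truncated_cauchy_mean(-a, a)     # limits grow at the same rate
    lopsided = truncated_cauchy_mean(-a, 2 * a)  # upper limit grows twice as fast
    print(a, symmetric, lopsided)

# The symmetric truncation is always 0, while the lopsided one
# approaches ln(2) / pi, so the improper integral has no well-defined value.
print(math.log(2) / math.pi)
```

In the notation of Remark 1, this is the case where both E[X₊] and E[X₋] are infinite.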

Basic properties

The properties below replicate or follow immediately from those of Lebesgue integral.

If A is an event, then ![{\displaystyle \operatorname {E} [{\mathbf {1} }_{A}]=\operatorname {P} (A),}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0c80961edf7759c225cf8aa133345e4e4eff7d39) where 1_A is the indicator function of the set A.

This follows from the definition of the Lebesgue integral of the simple function 1_A:

![{\displaystyle \operatorname {E} [{\mathbf {1} }_{A}]=1\cdot \operatorname {P} (A)+0\cdot \operatorname {P} (\Omega \setminus A)=\operatorname {P} (A).}](https://wikimedia.org/api/rest_v1/media/math/render/svg/49c23205ce0226e3a5e807040eea3ef1663e8542)

If X = Y (a.s.) then E[X] = E[Y]

The statement follows from the definition of Lebesgue integral if we notice that X₊ = Y₊ (a.s.), X₋ = Y₋ (a.s.), and that changing a simple random variable on a set of probability zero does not alter the expected value.

Expected value of a constant

If X is a random variable, and X = c (a.s.), where ![{\displaystyle c\in [-\infty ,+\infty ]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/efc16e7f0da8125427c46522d4e0fa5449dc7131), then ![{\displaystyle \operatorname {E} [X]=c}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8c081385ba053a066911729481c89ad435cc8c6a). In particular, for an arbitrary random variable X, ![{\displaystyle \operatorname {E} [\operatorname {E} [X]]=\operatorname {E} [X]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7ff311903fa69e69841abfef5c018d9c43145dac).

Linearity

The expected value operator ![\operatorname {E}[\cdot ]](https://wikimedia.org/api/rest_v1/media/math/render/svg/0a71518eb57ffaf54c0c31bf94de5ac9d7ab11a1) is linear in the sense that

![{\displaystyle {\begin{aligned}\operatorname {E} [X+Y]&=\operatorname {E} [X]+\operatorname {E} [Y],\\[6pt]\operatorname {E} [aX]&=a\operatorname {E} [X],\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c62c4be0f6e8ee186fb460338996729aaa9ae85d)

where X and Y are arbitrary random variables, and a is a constant.

More rigorously, let X and Y be random variables whose expected values are defined (different from ∞ − ∞).

- If E[X] + E[Y] is also defined (i.e. differs from ∞ − ∞), then ![{\displaystyle \operatorname {E} [X+Y]=\operatorname {E} [X]+\operatorname {E} [Y].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a6a3cada77936a04afda84615e3a2d88cf9461cc)
- Let E[X] be finite, and a be a finite scalar. Then E[aX] = a E[X].
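
Linearity does not require independence. The sketch below checks both identities for a fair die X and the strongly dependent variable Y = X². The example and the helper name `expect` are assumptions chosen for illustration; exact fractions avoid rounding.

```python
from fractions import Fraction

# Fair die: each face has probability 1/6.
pmf = {face: Fraction(1, 6) for face in range(1, 7)}


def expect(g):
    """E[g(X)] for the die X, computed as a probability-weighted sum."""
    return sum(g(x) * p for x, p in pmf.items())


e_x = expect(lambda x: x)            # E[X]   = 7/2
e_y = expect(lambda x: x * x)        # E[X^2] = 91/6
e_sum = expect(lambda x: x + x * x)  # E[X + Y] with Y = X^2
e_scaled = expect(lambda x: 3 * x)   # E[3X]

print(e_sum == e_x + e_y)   # True, although X and Y are dependent
print(e_scaled == 3 * e_x)  # True
```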

E[X] exists and is finite if and only if E[|X|] is finite

The following statements regarding a random variable X are equivalent:

- E[X] exists and is finite.
- Both E[X₊] and E[X₋] are finite.
- E[|X|] is finite.

Indeed, |X| = X₊ + X₋. By linearity, ![{\displaystyle \operatorname {E} [|X|]=\operatorname {E} [X_{+}]+\operatorname {E} [X_{-}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/6e02e47bffea1cfdac34780a6006c415bc422c5a). The above equivalency relies on the definition of Lebesgue integral and measurability of X.

Remark. For the reasons above, the expressions "X is integrable" and "the expected value of X is finite" are used interchangeably when speaking of a random variable throughout this article.

If X ≥ 0 (a.s.) then E[X] ≥ 0

Monotonicity

If X ≤ Y (a.s.), and both ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) and ![{\displaystyle \operatorname {E} [Y]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/639e8577c6faffc0471c7e123ead30970034e6d5) exist, then ![{\displaystyle \operatorname {E} [X]\leq \operatorname {E} [Y]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bcc409f2b956425dc9dacce39207930f60057d55).

Remark. ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) and ![{\displaystyle \operatorname {E} [Y]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/639e8577c6faffc0471c7e123ead30970034e6d5) exist in the sense that ![{\displaystyle \min(\operatorname {E} [X_{+}],\operatorname {E} [X_{-}])<\infty }](https://wikimedia.org/api/rest_v1/media/math/render/svg/af9afd1015b1795b9d746e902eadae41120fb080) and ![{\displaystyle \min(\operatorname {E} [Y_{+}],\operatorname {E} [Y_{-}])<\infty .}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ec0cee26e0baadf79157ffec4c25939070cb515d)

The proof follows from linearity and the non-negativity property applied to Z = Y − X, since Z ≥ 0 (a.s.).

If |X| ≤ Y (a.s.) and E[Y] is finite then so is E[X]

Let X and Y be random variables such that |X| ≤ Y (a.s.) and ![{\displaystyle \operatorname {E} [Y]<\infty }](https://wikimedia.org/api/rest_v1/media/math/render/svg/1d673ec21dbeafe0aa85b387902be8f1e99c71ab). Then ![{\displaystyle \operatorname {E} [X]\neq \pm \infty }](https://wikimedia.org/api/rest_v1/media/math/render/svg/69e181fc39fcbefa2553017eac18cdac2842d242).

Proof. Since |X| is non-negative, E|X| exists, finite or infinite. By monotonicity, ![{\displaystyle \operatorname {E} |X|\leq \operatorname {E} [Y]<\infty }](https://wikimedia.org/api/rest_v1/media/math/render/svg/76bf5ca01b19d23933c539e345beae216814a896), so E|X| is finite which, as we saw earlier, is equivalent to ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) being finite.

If E|X^β| < ∞ and 0 < α < β then E|X^α| < ∞

The proposition below will be used to prove the extremal property of ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) later on.

Proposition. If X is a random variable, then so is X^α, for every α > 0. If, in addition, E|X^β| < ∞ and 0 < α < β, then E|X^α| < ∞.

Counterexample for infinite measure

The requirement that P(Ω) < ∞ is essential. By way of counterexample, consider the measure space ([1, +∞), ℬ, λ), where ℬ is the Borel σ-algebra on the interval [1, +∞) and λ is the linear Lebesgue measure. The reader can prove that the integral of 1/x over [1, +∞) is infinite even though the integral of 1/x² over [1, +∞) equals 1. (Sketch of proof: A ↦ ∫_A (1/x) dx and A ↦ ∫_A (1/x²) dx each define a measure μ on ![{\displaystyle \textstyle [1,+\infty )=\cup _{n=1}^{\infty }[1,n].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1831e8f7184707c2f671a6f4f118e90ea25322f2) Use "continuity from below" with respect to μ and reduce to the Riemann integral on each finite subinterval ![[1,n]](https://wikimedia.org/api/rest_v1/media/math/render/svg/7c79af450e22e8fd23f28e6be4cb23a47b24c1ba).)

Extremal property

Recall, as shown earlier, that if X is a random variable, then so is X².

Proposition (extremal property of E[X]). Let X be a random variable, and ![{\displaystyle \operatorname {E} [X^{2}]<\infty }](https://wikimedia.org/api/rest_v1/media/math/render/svg/6c5ffb814ff31a1fdc2b6f3899412ac4d1bf1971). Then ![{\displaystyle \operatorname {E} [X]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) and ![{\displaystyle \operatorname {Var} [X]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b79297a808478243e9aab0b27dd1ab583c0f877d) are finite, and ![{\displaystyle \operatorname {E} [X]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) is the best least squares approximation for X among constants. Specifically,

- for every c ∈ ℝ, E[(X − c)²] ≥ Var[X];
- equality holds if and only if c = E[X].

(![{\displaystyle \operatorname {Var} [X]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b79297a808478243e9aab0b27dd1ab583c0f877d) denotes the variance of X.)

Remark. In intuitive terms, the extremal property says that if one is asked to predict the outcome of a trial of a random variable X, then ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93), in some practically useful sense, is one's best bet if no advance information about the outcome is available. If, on the other hand, one does have some advance knowledge ℱ regarding the outcome, then (again, in some practically useful sense) one's bet may be improved upon by using conditional expectations ![{\displaystyle \operatorname {E} [X\mid {\mathcal {F}}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/70a2249e7a329012144282a1cb87a39f44e455ba) (of which ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) is a special case) rather than ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93).

Proof of proposition. By the above properties, both ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) and ![{\displaystyle \operatorname {Var} [X]=\operatorname {E} [X^{2}]-\operatorname {E} ^{2}[X]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/e35910215b95aca69517a394fcebf1f81fa78593) are finite, and

![{\displaystyle {\begin{aligned}\operatorname {E} [X-c]^{2}&=\operatorname {E} [X^{2}-2cX+c^{2}]\\[6pt]&=\operatorname {E} [X^{2}]-2c\operatorname {E} [X]+c^{2}\\[6pt]&=(c-\operatorname {E} [X])^{2}+\operatorname {E} [X^{2}]-\operatorname {E} ^{2}[X]\\[6pt]&=(c-\operatorname {E} [X])^{2}+\operatorname {Var} [X],\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/9e7af9cc5a350786119f208ee5f0921bcdf1756d)

whence the extremal property follows.
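
The decomposition used in the proof can be verified exactly on a small example. In the sketch below the four-point distribution and the names `expect` and `mean_squared_error` are assumptions chosen for illustration; the check confirms that E[(X − c)²] = (c − E[X])² + Var[X] for a range of constants c, so the minimum is attained at c = E[X].

```python
from fractions import Fraction

# A small, deliberately skewed distribution (hypothetical example data).
outcomes = [0, 1, 4, 10]
probs = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 8), Fraction(1, 8)]


def expect(g):
    """E[g(X)] as a probability-weighted sum."""
    return sum(g(x) * p for x, p in zip(outcomes, probs))


mean = expect(lambda x: x)                    # 2
variance = expect(lambda x: (x - mean) ** 2)  # 43/4


def mean_squared_error(c):
    """E[(X - c)^2], the least squares cost of predicting X by the constant c."""
    return expect(lambda x: (x - c) ** 2)


candidates = [Fraction(k, 2) for k in range(-4, 13)]
best = min(candidates, key=mean_squared_error)
print(best == mean)                          # True
print(mean_squared_error(mean) == variance)  # True
```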

Non-degeneracy

If E|X| = 0, then X = 0 (a.s.).

If E[X] < +∞ then X < +∞ (a.s.)

Corollary: if E[X] > −∞ then X > −∞ (a.s.)

Corollary: if E|X| < ∞ then X ≠ ±∞ (a.s.)

|E[X]| ≤ E|X|

For an arbitrary random variable X, ![{\displaystyle |\operatorname {E} [X]|\leq \operatorname {E} |X|}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d950496113ee61bc1f496eecbadcf6bcc85e8d62).

Proof. By definition of Lebesgue integral,

![{\displaystyle {\begin{aligned}|\operatorname {E} [X]|&={\Bigl |}\operatorname {E} [X_{+}]-\operatorname {E} [X_{-}]{\Bigr |}\leq {\Bigl |}\operatorname {E} [X_{+}]{\Bigr |}+{\Bigl |}\operatorname {E} [X_{-}]{\Bigr |}\\[5pt]&=\operatorname {E} [X_{+}]+\operatorname {E} [X_{-}]=\operatorname {E} [X_{+}+X_{-}]\\[5pt]&=\operatorname {E} |X|.\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/db2e8a0c2abdfcae43b6c4375d74fd3134b5aece)

This result can also be proved based on Jensen's inequality.

Non-multiplicativity

In general, the expected value operator is not multiplicative, i.e. ![{\displaystyle \operatorname {E} [XY]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/612af0bbf256874e0b0551305574be507f9ff805) is not necessarily equal to ![{\displaystyle \operatorname {E} [X]\cdot \operatorname {E} [Y]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c52e5f76c5aad37aeeaf32d355681263e92aad24). Indeed, let X assume the values of 1 and −1 with probability 0.5 each. Then

![{\displaystyle \operatorname {E^{2}} [X]=\left({\frac {1}{2}}\cdot (-1)+{\frac {1}{2}}\cdot 1\right)^{2}=0,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a7011b31ea49e21300680cf711cf22de985cdb29)

and

![{\displaystyle \operatorname {E} [X^{2}]={\frac {1}{2}}\cdot (-1)^{2}+{\frac {1}{2}}\cdot 1^{2}=1,{\text{ so }}\operatorname {E} [X^{2}]\neq \operatorname {E^{2}} [X].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/e70a760d1e239b6ae56aaecb4ae64fbc1d9ba597)

The amount by which the multiplicativity fails is called the covariance:

![\operatorname {Cov} (X,Y)=\operatorname {E} [XY]-\operatorname {E} [X]\operatorname {E} [Y].](https://wikimedia.org/api/rest_v1/media/math/render/svg/f5e6ff22acd2353e95a647f4ef5adb997748df14)

If X and Y are independent, then ![{\displaystyle \operatorname {E} [XY]=\operatorname {E} [X]\operatorname {E} [Y]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5cfc97e307911d3230962dd68be6a5c3dcaed71a), and Cov(X, Y) = 0.
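
Both situations can be reproduced with the ±1 variable from the example above. The sketch below is illustrative only; the joint distributions and the helper name `covariance` are assumptions. It computes Cov(X, Y) = E[XY] − E[X]E[Y] once for Y = X (fully dependent) and once for an independent copy Y.

```python
from fractions import Fraction
from itertools import product

half = Fraction(1, 2)
values = (-1, 1)


def covariance(joint):
    """Cov(X, Y) = E[XY] - E[X] E[Y] for a joint pmf {(x, y): probability}."""
    e_xy = sum(x * y * p for (x, y), p in joint.items())
    e_x = sum(x * p for (x, _), p in joint.items())
    e_y = sum(y * p for (_, y), p in joint.items())
    return e_xy - e_x * e_y


# Y = X: all probability mass sits on the diagonal.
dependent = {(x, x): half for x in values}

# X and Y independent: the joint pmf is the product of the marginals.
independent = {(x, y): half * half for x, y in product(values, repeat=2)}

print(covariance(dependent))    # 1
print(covariance(independent))  # 0
```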

Counterexample: E[Xᵢ] ↛ E[X] despite Xᵢ → X pointwise

Let ![{\displaystyle \left([0,1],{\mathcal {B}}_{[0,1]},{\mathrm {P} }\right)}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d54b1bbde84a4583352df8267960eb905576d691) be the probability space, where ![{\displaystyle {\mathcal {B}}_{[0,1]}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/94e71775a63f50c58d04fbf173f153a91eb800d3) is the Borel σ-algebra on ![[0,1]](https://wikimedia.org/api/rest_v1/media/math/render/svg/738f7d23bb2d9642bab520020873cccbef49768d) and P the linear Lebesgue measure. For i ≥ 1, define a sequence of random variables

![{\displaystyle X_{i}=i\cdot {\mathbf {1} }_{\left[0,{\frac {1}{i}}\right]}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/6eaaf808e18df886be901ac5de19ff15effaca9d)

and a random variable X, the pointwise limit of the Xᵢ, on ![[0,1]](https://wikimedia.org/api/rest_v1/media/math/render/svg/738f7d23bb2d9642bab520020873cccbef49768d), with 1_S being the indicator function of the set ![{\displaystyle S\subseteq [0,1]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c4baae402ffc86908db11cf04dd0f004e7d0907f).

For every ![{\displaystyle x\in [0,1],}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c68354ed86bae40d711eba3ef26c4ec740fcc8fc) as i → +∞, Xᵢ(x) → X(x), and

![{\displaystyle \operatorname {E} [X_{i}]=i\cdot {\mathrm {P} }\left(\left[0,{\frac {1}{i}}\right]\right)=i\cdot {\dfrac {1}{i}}=1,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0a9e3c81a64f8e39881376d85ba685df594092ea)

so ![{\displaystyle \lim _{i\to \infty }\operatorname {E} [X_{i}]=1.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a1bf738a32146d9578c23fe91b12a775127b729e) On the other hand, X = 0 on (0, 1], so X = 0 (a.s.), and hence

![{\displaystyle \operatorname {E} \left[X\right]=0.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/77924070fe90b2dc41450278ee789167be8b8416)
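
The behaviour of this counterexample can be traced with exact arithmetic. The sketch below is an illustration, with hypothetical function names: it evaluates Xᵢ at one fixed point x > 0, where the values eventually drop to 0, while the expectation i · P([0, 1/i]) stays equal to 1 for every i.

```python
from fractions import Fraction


def x_i(i, x):
    """X_i(x) = i if 0 <= x <= 1/i, and 0 otherwise."""
    return i if 0 <= x <= Fraction(1, i) else 0


def expectation_x_i(i):
    """E[X_i] = i * P([0, 1/i]) under Lebesgue measure on [0, 1]."""
    return i * Fraction(1, i)


point = Fraction(1, 100)
indices = (1, 10, 100, 101, 1000)

values_at_point = [x_i(i, point) for i in indices]
expectations = [expectation_x_i(i) for i in indices]

print(values_at_point)  # [1, 10, 100, 0, 0]
print(expectations)     # every entry equals 1
```

The mass of Xᵢ concentrates on an ever smaller interval near 0, which is why the pointwise limit loses it.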

Countable non-additivity

In general, the expected value operator is not σ-additive; that is, it can happen that

![{\displaystyle \operatorname {E} \left[\sum _{i=0}^{\infty }X_{i}\right]\neq \sum _{i=0}^{\infty }\operatorname {E} [X_{i}].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2ac6edd6c60294d31d7d7381f1da96929cae656a)

By way of counterexample, let ![{\displaystyle \left([0,1],{\mathcal {B}}_{[0,1]},{\mathrm {P} }\right)}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d54b1bbde84a4583352df8267960eb905576d691) be the probability space, where ![{\displaystyle {\mathcal {B}}_{[0,1]}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/94e71775a63f50c58d04fbf173f153a91eb800d3) is the Borel σ-algebra on ![[0,1]](https://wikimedia.org/api/rest_v1/media/math/render/svg/738f7d23bb2d9642bab520020873cccbef49768d) and P the linear Lebesgue measure. Define a sequence of random variables ![{\displaystyle \textstyle X_{i}=(i+1)\cdot {\mathbf {1} }_{\left[0,{\frac {1}{i+1}}\right]}-i\cdot {\mathbf {1} }_{\left[0,{\frac {1}{i}}\right]}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a37886d012e2e959dab737e89bccd26dda11b126) on ![[0,1]](https://wikimedia.org/api/rest_v1/media/math/render/svg/738f7d23bb2d9642bab520020873cccbef49768d), with 1_S being the indicator function of the set ![{\displaystyle S\subseteq [0,1]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c4baae402ffc86908db11cf04dd0f004e7d0907f). For the pointwise sums, we have

![{\displaystyle \sum _{i=0}^{n}X_{i}=(n+1)\cdot {\mathbf {1} }_{\left[0,{\frac {1}{n+1}}\right]},}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8ab89bc6b39307e2866eed4d9f32559f11f3b907)

so the infinite sum of the Xᵢ(x) equals 0 for every x in (0, 1].

By finite additivity,

![{\displaystyle \sum _{i=0}^{\infty }\operatorname {E} [X_{i}]=\lim _{n\to \infty }\sum _{i=0}^{n}\operatorname {E} [X_{i}]=\lim _{n\to \infty }\operatorname {E} \left[\sum _{i=0}^{n}X_{i}\right]=1.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ff72f1612ba41df7e5715de86a790b9e94c55d5b)

On the other hand, the infinite sum of the Xᵢ is 0 (a.s.), and hence

![{\displaystyle \operatorname {E} \left[\sum _{i=0}^{\infty }X_{i}\right]=0\neq 1=\sum _{i=0}^{\infty }\operatorname {E} [X_{i}].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c2ccc2fa3e68160601e7d25b4ea9ae364abbe999)

Countable additivity for non-negative random variables

Let X₀, X₁, X₂, … be non-negative random variables. It follows from the monotone convergence theorem that

![{\displaystyle \operatorname {E} \left[\sum _{i=0}^{\infty }X_{i}\right]=\sum _{i=0}^{\infty }\operatorname {E} [X_{i}].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/aaf71dd77b7a0d0e91daeb404051d791320a19f2)

Inequalities

Cauchy–Bunyakovsky–Schwarz inequality

The Cauchy–Bunyakovsky–Schwarz inequality states that

![{\displaystyle (\operatorname {E} [XY])^{2}\leq \operatorname {E} [X^{2}]\cdot \operatorname {E} [Y^{2}].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/e270eda0d23ede2b9693a7d0b0d29d014b52bdc0)

Markov's inequality

For a non-negative random variable X and a > 0, Markov's inequality states that

![{\displaystyle \operatorname {P} (X\geq a)\leq {\frac {\operatorname {E} [X]}{a}}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d33c3c6fa0ecb7b99a4245dc1f55668bc50fd8cc)

Bienaymé-Chebyshev inequality

Let $X$ be an arbitrary random variable with finite expected value ![\operatorname {E} [X]](https://wikimedia.org/api/rest_v1/media/math/render/svg/44dd294aa33c0865f58e2b1bdaf44ebe911dbf93) and finite variance ![{\displaystyle \operatorname {Var} [X]\neq 0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8daf591feb95c7381b749d79ad0a8efb40205e53). The Bienaymé-Chebyshev inequality states that, for any real number $k > 0$,

![{\displaystyle \operatorname {P} {\Bigl (}{\Bigl |}X-\operatorname {E} [X]{\Bigr |}\geq k{\sqrt {\operatorname {Var} [X]}}{\Bigr )}\leq {\frac {1}{k^{2}}}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1eae646393be5630426222c88d9594be5f140d5a)
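A minimal numerical illustration, assuming a fair six-sided die (an example distribution chosen here, not one prescribed by the inequality). The event inside the probability is tested through squared deviations so that the arithmetic stays exact; `deviation_probability` is a hypothetical helper.

```python
from fractions import Fraction

# Fair six-sided die (illustrative choice).
pmf = {x: Fraction(1, 6) for x in range(1, 7)}
mean = sum(x * p for x, p in pmf.items())
var = sum((x - mean) ** 2 * p for x, p in pmf.items())

def deviation_probability(k):
    """P(|X - E[X]| >= k * sqrt(Var[X])), compared via squares to avoid square roots."""
    return sum(p for x, p in pmf.items() if (x - mean) ** 2 >= k * k * var)

for k in (Fraction(1), Fraction(5, 4), Fraction(3, 2), Fraction(2)):
    lhs = deviation_probability(k)
    rhs = 1 / (k * k)
    assert lhs <= rhs  # Bienaymé-Chebyshev inequality
    print(f"k={k}: P = {lhs} <= 1/k^2 = {rhs}")
```

The bound holds for every distribution with finite non-zero variance, so for a specific distribution such as this one it is usually far from tight.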

Jensen's inequality

Let $f : \mathbb{R} \to \mathbb{R}$ be a Borel convex function and $X$ a random variable such that $\operatorname{E}|X| < \infty$. Jensen's inequality states that

$$f(\operatorname{E}[X]) \leq \operatorname{E}[f(X)].$$

The expected value $\operatorname{E}[f(X)]$ is well-defined even if $X$ is allowed to assume infinite values. Indeed, $\operatorname{E}|X| < \infty$ implies that $|X| < \infty$ (a.s.), so the random variable $f(X(\omega))$ is defined almost surely, and therefore there is enough information to compute $\operatorname{E}[f(X)]$.

In particular, Jensen's inequality implies that ![{\displaystyle |\operatorname {E} [X]|\leq \operatorname {E} |X|}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d950496113ee61bc1f496eecbadcf6bcc85e8d62) since the absolute value function is convex.
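To make Jensen's inequality concrete, the sketch below compares the value of a convex function at the mean with the mean of the function, for two convex functions. The fair die and the two functions `square` and `distance_from_three` are assumptions made for illustration only.

```python
from fractions import Fraction

# Fair six-sided die (illustrative choice).
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

def expectation(g):
    """E[g(X)] for the discrete random variable described by pmf."""
    return sum(g(x) * p for x, p in pmf.items())

def square(x):
    return x * x

def distance_from_three(x):
    return abs(x - 3)

mean = expectation(lambda x: x)
for f in (square, distance_from_three):
    at_mean = f(mean)             # f(E[X])
    mean_of_f = expectation(f)    # E[f(X)]
    assert at_mean <= mean_of_f   # Jensen's inequality for convex f
    print(f"{f.__name__}: f(E[X]) = {at_mean} <= E[f(X)] = {mean_of_f}")
```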

Lyapunov's inequality

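Let $0 < s < t$. Lyapunov's inequality states that

$$\left(\operatorname{E}|X|^{s}\right)^{1/s} \leq \left(\operatorname{E}|X|^{t}\right)^{1/t}.$$

Proof. Applying Jensen's inequality to $|X|^{s}$ and $g(x) = |x|^{t/s}$, obtain $\left|\operatorname{E}|X|^{s}\right|^{t/s} \leq \operatorname{E}\left[\left(|X|^{s}\right)^{t/s}\right] = \operatorname{E}|X|^{t}$. Taking the $t$-th root of each side completes the proof.

Corollary.

$$\operatorname{E}|X| \leq \left(\operatorname{E}|X|^{2}\right)^{1/2} \leq \cdots \leq \left(\operatorname{E}|X|^{n}\right)^{1/n} \leq \cdots$$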

Hölder's inequality

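Let $p$ and $q$ satisfy $1 \leq p \leq \infty$, $1 \leq q \leq \infty$, and $1/p + 1/q = 1$. Hölder's inequality states that

$$\operatorname{E}|XY| \leq \left(\operatorname{E}|X|^{p}\right)^{1/p} \left(\operatorname{E}|Y|^{q}\right)^{1/q}.$$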

Minkowski inequality

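Let $p$ be an integer satisfying $1 \leq p < \infty$. Let, in addition, $\operatorname{E}|X|^{p} < \infty$ and $\operatorname{E}|Y|^{p} < \infty$. Then, according to the Minkowski inequality, $\operatorname{E}|X+Y|^{p} < \infty$ and

$$\left(\operatorname{E}|X+Y|^{p}\right)^{1/p} \leq \left(\operatorname{E}|X|^{p}\right)^{1/p} + \left(\operatorname{E}|Y|^{p}\right)^{1/p}.$$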

Taking limits under the $\operatorname{E}$ sign

Monotone convergence theorem

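Let the sequence of random variables $\{X_n\}$ and the random variables $X$ and $Y$ be defined on the same probability space $(\Omega, \Sigma, \operatorname{P})$. Suppose that

all the expected values $\operatorname{E}[X_n]$, $\operatorname{E}[X]$, and $\operatorname{E}[Y]$ are defined (differ from $\infty - \infty$);

$\operatorname{E}[Y] > -\infty$;

for every $n$, $-\infty \leq Y \leq X_n \leq X_{n+1} \leq +\infty$ (a.s.);

$X$ is the pointwise limit of $\{X_n\}$ (a.s.), i.e. $X(\omega) = \lim_n X_n(\omega)$ (a.s.).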

The monotone convergence theorem states that

![{\displaystyle \lim _{n}\operatorname {E} [X_{n}]=\operatorname {E} [X].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2b73765da42e85ed02b14edbdb043d4439a7d811)

Fatou's lemma

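Let the sequence of random variables $\{X_n\}$ and the random variable $Y$ be defined on the same probability space $(\Omega, \Sigma, \operatorname{P})$. Suppose that

all the expected values $\operatorname{E}[X_n]$, $\operatorname{E}[\liminf_n X_n]$, and $\operatorname{E}[Y]$ are defined (differ from $\infty - \infty$);

$\operatorname{E}[Y] > -\infty$;

$-\infty \leq Y \leq X_n \leq +\infty$ (a.s.), for every $n$.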

Fatou's lemma states that

![{\displaystyle \operatorname {E} [\liminf _{n}X_{n}]\leq \liminf _{n}\operatorname {E} [X_{n}].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/057772ee68f6360861362f952828d9777135c25f)

(Note that $\liminf_n X_n$ is a random variable by the properties of the limit inferior.)

Corollary. Let

$X_n \to X$ pointwise (a.s.);

$\operatorname{E}[X_n] \leq C$ for some constant $C$ (independent of $n$);

$\operatorname{E}[Y] > -\infty$;

$-\infty \leq Y \leq X_n \leq +\infty$ (a.s.), for every $n$.

Then

![{\displaystyle \operatorname {E} [X]\leq C.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/41f7fe3f4be094774d092c875c87774a60101495)

The proof follows by observing that $X = \liminf_n X_n$ (a.s.) and applying Fatou's lemma.

Dominated convergence theorem

Let $\{X_n\}$ be a sequence of random variables. Suppose that $X_n \to X$ pointwise (a.s.), $|X_n| \leq Y \leq +\infty$ (a.s.), and ![{\displaystyle \operatorname {E} [Y]<\infty }](https://wikimedia.org/api/rest_v1/media/math/render/svg/1d673ec21dbeafe0aa85b387902be8f1e99c71ab). Then, according to the dominated convergence theorem,

the function $X$ is measurable (hence a random variable);

$\operatorname{E}|X| < \infty$;

all the expected values $\operatorname{E}[X_n]$ and $\operatorname{E}[X]$ are defined (do not have the form $\infty - \infty$);

$\lim_n \operatorname{E}[X_n] = \operatorname{E}[X]$ (both sides are finite, since $|X_n| \leq Y$ and $\operatorname{E}[Y] < \infty$);

$\lim_n \operatorname{E}|X_n - X| = 0$.

Uniform integrability

The equality ![{\displaystyle \displaystyle \lim _{n}\operatorname {E} [X_{n}]=\operatorname {E} [\lim _{n}X_{n}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/49b910be9482f360448e16c89ac52046dab69f9d) holds when the sequence $\{X_n\}$ is uniformly integrable.

Relationship with characteristic function

The probability density function $f_X$ of a scalar random variable $X$ is related to its characteristic function $\varphi_X$ by the inversion formula:

$$f_X(x) = \frac{1}{2\pi} \int_{\mathbb{R}} e^{-itx} \varphi_X(t)\,dt.$$

For the expected value of $g(X)$ (where $g : \mathbb{R} \to \mathbb{R}$ is a Borel function), we can use this inversion formula to obtain

![{\displaystyle \operatorname {E} [g(X)]={\frac {1}{2\pi }}\int _{\mathbb {R} }g(x)\left[\int _{\mathbb {R} }e^{-itx}\varphi _{X}(t)\,dt\right]\,dx.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/135fbb1b442ae5cf9d76032b94b1a996367b8cd8)

If ![{\displaystyle \operatorname {E} [g(X)]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/eb4b4bbeb1430cfba120570df9f18fb09480a7f3) is finite, changing the order of integration, we get, in accordance with the Fubini–Tonelli theorem,

![{\displaystyle \operatorname {E} [g(X)]={\frac {1}{2\pi }}\int _{\mathbb {R} }G(t)\varphi _{X}(t)\,dt,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f0d10e08cda1c47d94fc86ba0d45fd4ca51e6670)

where

$$G(t) = \int_{\mathbb{R}} g(x) e^{-itx}\,dx$$

is the Fourier transform of $g(x)$. The expression for ![{\displaystyle \operatorname {E} [g(X)]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/eb4b4bbeb1430cfba120570df9f18fb09480a7f3) also follows directly from the Plancherel theorem.

Uses and applications

The mass of probability distribution is balanced at the expected value, here a Beta(α,β) distribution with expected value α/(α+β).

It is possible to construct an expected value equal to the probability of an event by taking the expectation of an indicator function that is one if the event has occurred and zero otherwise. This relationship can be used to translate properties of expected values into properties of probabilities, e.g. using the law of large numbers to justify estimating probabilities by frequencies.

The expected values of the powers of X are called the moments of X; the moments about the mean of X are expected values of powers of X − E[X]. The moments of some random variables can be used to specify their distributions, via their moment generating functions.

To empirically estimate the expected value of a random variable, one repeatedly measures observations of the variable and computes the arithmetic mean of the results. If the expected value exists, this procedure estimates the true expected value in an unbiased manner and has the property of minimizing the sum of the squares of the residuals (the sum of the squared differences between the observations and the estimate). The law of large numbers demonstrates (under fairly mild conditions) that, as the size of the sample gets larger, the variance of this estimate gets smaller.
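The estimation procedure described above can be sketched as a small simulation. The example assumes repeated rolls of a fair six-sided die, whose expected value is 3.5; the function name `sample_mean` and the fixed seed are illustrative choices.

```python
import random

def sample_mean(n, rng):
    """Arithmetic mean of n simulated rolls of a fair six-sided die."""
    return sum(rng.randint(1, 6) for _ in range(n)) / n

rng = random.Random(0)  # fixed seed so that repeated runs give the same numbers
for n in (10, 1_000, 100_000):
    estimate = sample_mean(n, rng)
    print(f"n={n}: sample mean = {estimate:.4f}, error = {abs(estimate - 3.5):.4f}")
```

With larger samples the estimates typically cluster more tightly around 3.5, although any single run can deviate.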

Many quantities of interest can be written in terms of expectation, e.g. ![{\displaystyle \operatorname {P} ({X\in {\mathcal {A}}})=\operatorname {E} [{\mathbf {1} }_{\mathcal {A}}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bc2d627e0e24ccb93ccedb966935f49319f4fd25), where $\mathbf{1}_{\mathcal{A}}$ is the indicator function of the set $\mathcal{A}$.

In classical mechanics, the center of mass is an analogous concept to expectation. For example, suppose X is a discrete random variable with values $x_i$ and corresponding probabilities $p_i$. Now consider a weightless rod on which weights are placed at locations $x_i$ along the rod, with masses $p_i$ (whose sum is one). The point at which the rod balances is E[X].
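The balance-point picture can be verified directly: the net torque of the weights about E[X] is zero. The locations and masses below are hypothetical values chosen for illustration.

```python
from fractions import Fraction

# Hypothetical weights on a rod: location -> mass (the masses sum to one).
weights = {0: Fraction(1, 2), 2: Fraction(3, 10), 10: Fraction(1, 5)}
assert sum(weights.values()) == 1

# Expected value, i.e. the center of mass of the weights.
balance_point = sum(x * p for x, p in weights.items())

# Net torque about the balance point: sum of mass times signed distance.
net_torque = sum(p * (x - balance_point) for x, p in weights.items())

print(f"E[X] = {balance_point}, net torque about E[X] = {net_torque}")
```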

Expected values can also be used to compute the variance, by means of the computational formula for the variance

![\operatorname {Var} (X)=\operatorname {E} [X^{2}]-(\operatorname {E} [X])^{2}.](https://wikimedia.org/api/rest_v1/media/math/render/svg/3704ee667091917e2e34f5b6e28e8d49df4b9650)
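A short check that the computational formula agrees with the definition of the variance as the expected squared deviation from the mean. The three-point distribution used here is an assumption made for the example.

```python
from fractions import Fraction

# Hypothetical discrete distribution: value -> probability.
pmf = {0: Fraction(1, 2), 1: Fraction(1, 4), 4: Fraction(1, 4)}

e_x = sum(x * p for x, p in pmf.items())        # E[X]
e_x2 = sum(x * x * p for x, p in pmf.items())   # E[X^2]

var_computational = e_x2 - e_x ** 2                              # E[X^2] - (E[X])^2
var_definition = sum((x - e_x) ** 2 * p for x, p in pmf.items()) # E[(X - E[X])^2]

assert var_computational == var_definition
print(f"E[X] = {e_x}, E[X^2] = {e_x2}, Var(X) = {var_computational}")
```

In floating-point arithmetic the computational formula can lose precision when the two terms are large and nearly equal, which is why exact fractions are used in this sketch.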

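In quantum mechanics, the expectation value of an operator $\hat{A}$ operating on a quantum state vector $|\psi\rangle$ is written as $\langle \hat{A} \rangle = \langle \psi | \hat{A} | \psi \rangle$. The uncertainty in $\hat{A}$ can be calculated using the formula $(\Delta A)^{2} = \langle \hat{A}^{2} \rangle - \langle \hat{A} \rangle^{2}$.

The law of the unconscious statistician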

The expected value of a measurable function of $X$, $g(X)$, given that $X$ has a probability density function $f(x)$, is given by the inner product of $f$ and $g$:

![{\displaystyle \operatorname {E} [g(X)]=\int _{\mathbb {R} }g(x)f(x)\,dx.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a417b7efdd5329bcd40b2efd4b8ed5bd3b031e52)
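A numerical sketch of this formula, assuming $X$ is uniformly distributed on $[0, 1]$ and $g(x) = x^2$, for which the exact answer is 1/3. The integral is approximated with a simple midpoint rule; the function `lotus` is a hypothetical helper written for this example, not a library routine.

```python
def lotus(g, f, lo, hi, n=10_000):
    """Midpoint-rule approximation of the integral of g(x) * f(x) over [lo, hi]."""
    h = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * h  # midpoint of the i-th subinterval
        total += g(x) * f(x)
    return total * h

def uniform_pdf(x):
    """Density of the Uniform(0, 1) distribution on its support [0, 1]."""
    return 1.0

# E[X^2] for X ~ Uniform(0, 1); the exact value is 1/3.
second_moment = lotus(lambda x: x * x, uniform_pdf, 0.0, 1.0)
print(second_moment)
```

Note that the density of $g(X)$ itself is never computed; only the density of $X$ is needed.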

Alternative formula for expected value

Formula for non-negative random variables

Finite and countably infinite case

For a non-negative integer-valued random variable $X$,

![{\displaystyle \operatorname {E} [X]=\sum _{i=1}^{\infty }\operatorname {P} (X\geq i).}](https://wikimedia.org/api/rest_v1/media/math/render/svg/85715f502fef4a655370b691ceab776b06467dbe)
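The tail-sum formula can be compared with the direct definition on a small case. The example below assumes $X$ is the number of heads in three fair coin flips, a distribution chosen only for illustration.

```python
from fractions import Fraction
from math import comb

# Number of heads in n fair coin flips: P(X = k) = C(n, k) / 2^n.
n = 3
pmf = {k: Fraction(comb(n, k), 2 ** n) for k in range(n + 1)}

# Direct definition: sum of value times probability.
direct = sum(k * p for k, p in pmf.items())

# Tail-sum formula: sum over i >= 1 of P(X >= i); terms with i > n are zero.
tail_sum = sum(
    sum(p for k, p in pmf.items() if k >= i)
    for i in range(1, n + 1)
)

assert direct == tail_sum
print(f"direct = {direct}, tail sum = {tail_sum}")
```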

General case

If ![{\displaystyle X:\Omega \to [0,+\infty ]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/97556c9d6c579157a7450296d0d8874307cc6c56) is a non-negative random variable, then

![{\displaystyle \operatorname {E} [X]=\int \limits _{[0,+\infty )}\operatorname {P} (X\geq x)\,dx=\int \limits _{[0,+\infty )}\operatorname {P} (X>x)\,dx,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5bb7778f202e6c6173104df23c5b28061ccaa166)

and

![{\displaystyle \operatorname {E} [X]={\hbox{(R)}}\int \limits _{0}^{\infty }\operatorname {P} (X\geq x)\,dx={\hbox{(R)}}\int \limits _{0}^{\infty }\operatorname {P} (X>x)\,dx,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/34acca8e31b325f80c5d12e58da98a0732e792a5)

where $(\mathrm{R})\int$ denotes the improper Riemann integral.
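As a worked illustration (the exponential distribution is chosen here only as an example and is not taken from the text above), let $X$ be exponentially distributed with rate $\lambda > 0$, so that $\operatorname{P}(X > x) = e^{-\lambda x}$ for $x \geq 0$. Then

$$\operatorname{E}[X] = \int_{0}^{\infty} \operatorname{P}(X > x)\,dx = \int_{0}^{\infty} e^{-\lambda x}\,dx = \frac{1}{\lambda},$$

which agrees with the value obtained from the density, $\int_{0}^{\infty} x\,\lambda e^{-\lambda x}\,dx = 1/\lambda$.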

Formula for non-positive random variables

If ![{\displaystyle X:\Omega \to [-\infty ,0]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/271f630683820b6e58bbaed1607a4625bf0e9218) is a non-positive random variable, then

![{\displaystyle \operatorname {E} [X]=-\int \limits _{(-\infty ,0]}\operatorname {P} (X\leq x)\,dx=-\int \limits _{(-\infty ,0]}\operatorname {P} (X<x)\,dx,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8274a2ff34778ca40961ad94ff1110325034d4a0)

and

![{\displaystyle \operatorname {E} [X]=-{\hbox{(R)}}\int \limits _{-\infty }^{0}\operatorname {P} (X\leq x)\,dx=-{\hbox{(R)}}\int \limits _{-\infty }^{0}\operatorname {P} (X<x)\,dx,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f0a3a9a53dde8716f65759be51975948cc8fff1f)

where $(\mathrm{R})\int$ denotes the improper Riemann integral.

If, in addition, $X$ is integer-valued, i.e. $X : \Omega \to \{\ldots, -3, -2, -1, 0\} \cup \{-\infty\}$, then

![{\displaystyle \operatorname {E} [X]=-\sum _{i=-1}^{-\infty }\operatorname {P} (X\leq i).}](https://wikimedia.org/api/rest_v1/media/math/render/svg/beea16bfc472a05d213096689bf5fc4ebfae0613)

General case

If $X$ can be both positive and negative, then ![{\displaystyle \operatorname {E} [X]=\operatorname {E} [X_{+}]-\operatorname {E} [X_{-}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/91c3ebdf9ae9d089c6f85b62c34d0e3325ceefb1), where $X_{+} = \max(X, 0)$ and $X_{-} = \max(-X, 0)$, and the above results may be applied to $X_{+}$ and $X_{-}$ separately.
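A small sketch of this decomposition, assuming an integer-valued random variable with the hypothetical distribution below and taking the positive and negative parts to be max(X, 0) and max(−X, 0). The tail-sum formula for non-negative integer-valued variables is applied to each part separately and the difference is compared with E[X] computed directly.

```python
from fractions import Fraction

# Hypothetical integer-valued distribution taking both signs: value -> probability.
pmf = {-3: Fraction(1, 4), -1: Fraction(1, 4), 4: Fraction(1, 2)}

def positive_part(x):
    return max(x, 0)

def negative_part(x):
    return max(-x, 0)

def tail_sum(part, upper):
    """Sum over i = 1..upper of P(part(X) >= i) for a non-negative integer-valued part."""
    return sum(
        sum(p for x, p in pmf.items() if part(x) >= i)
        for i in range(1, upper + 1)
    )

e_plus = tail_sum(positive_part, 4)    # E[X+]; the positive part is at most 4
e_minus = tail_sum(negative_part, 3)   # E[X-]; the negative part is at most 3
e_x = sum(x * p for x, p in pmf.items())

assert e_plus - e_minus == e_x
print(f"E[X+] = {e_plus}, E[X-] = {e_minus}, E[X] = {e_x}")
```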

History

The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes in a fair way between two players who have to end their game before it's properly finished. This problem had been debated for centuries, and many conflicting proposals and solutions had been suggested over the years, when it was posed in 1654 to Blaise Pascal by French writer and amateur mathematician Chevalier de Méré. Méré claimed that this problem couldn't be solved and that it showed just how flawed mathematics was when it came to its application to the real world. Pascal, being a mathematician, was provoked and determined to solve the problem once and for all. He began to discuss the problem in a now famous series of letters to Pierre de Fermat. Soon enough they both independently came up with a solution. They solved the problem in different computational ways but their results were identical because their computations were based on the same fundamental principle. The principle is that the value of a future gain should be directly proportional to the chance of getting it. This principle seemed to have come naturally to both of them. They were very pleased by the fact that they had found essentially the same solution and this in turn made them absolutely convinced they had solved the problem conclusively. However, they did not publish their findings. They only informed a small circle of mutual scientific friends in Paris about it.[7]

Three years later, in 1657, a Dutch mathematician Christiaan Huygens, who had just visited Paris, published a treatise (see Huygens (1657)) "De ratiociniis in ludo aleæ" on probability theory. In this book he considered the problem of points and presented a solution based on the same principle as the solutions of Pascal and Fermat. Huygens also extended the concept of expectation by adding rules for how to calculate expectations in more complicated situations than the original problem (e.g., for three or more players). In this sense this book can be seen as the first successful attempt at laying down the foundations of the theory of probability.

In the foreword to his book, Huygens wrote: "It should be said, also, that for some time some of the best mathematicians of France have occupied themselves with this kind of calculus so that no one should attribute to me the honour of the first invention. This does not belong to me. But these savants, although they put each other to the test by proposing to each other many questions difficult to solve, have hidden their methods. I have had therefore to examine and go deeply for myself into this matter by beginning with the elements, and it is impossible for me for this reason to affirm that I have even started from the same principle. But finally I have found that my answers in many cases do not differ from theirs." (cited by Edwards (2002)). Thus, Huygens learned about de Méré's Problem in 1655 during his visit to France; later on in 1656 from his correspondence with Carcavi he learned that his method was essentially the same as Pascal's; so that before his book went to press in 1657 he knew about Pascal's priority in this subject.

Neither Pascal nor Huygens used the term "expectation" in its modern sense. In particular, Huygens writes: "That my Chance or Expectation to win any thing is worth just such a Sum, as wou'd procure me in the same Chance and Expectation at a fair Lay. ... If I expect a or b, and have an equal Chance of gaining them, my Expectation is worth (a+b)/2." More than a hundred years later, in 1814, Pierre-Simon Laplace published his tract "Théorie analytique des probabilités", where the concept of expected value was defined explicitly:

… this advantage in the theory of chance is the product of the sum hoped for by the probability of obtaining it; it is the partial sum which ought to result when we do not wish to run the risks of the event in supposing that the division is made proportional to the probabilities. This division is the only equitable one when all strange circumstances are eliminated; because an equal degree of probability gives an equal right for the sum hoped for. We will call this advantage mathematical hope.

See also

Central tendency

Chebyshev's inequality (an inequality on location and scale parameters)

Conditional expectation

Expected value is also a key concept in economics, finance, and many other subjects

The general term expectation

Expectation value (quantum mechanics)

Law of total expectation – the expected value of the conditional expected value of X given Y is the same as the expected value of X.

Moment (mathematics)

Nonlinear expectation (a generalization of the expected value)

Wald's equation for calculating the expected value of a random number of random variables

![{\displaystyle \operatorname {E} [X]=1\cdot {\frac {1}{6}}+2\cdot {\frac {1}{6}}+3\cdot {\frac {1}{6}}+4\cdot {\frac {1}{6}}+5\cdot {\frac {1}{6}}+6\cdot {\frac {1}{6}}=3.5.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d535e1c37fd63db36fd0878e39b43ea7fa513ea4)

![{\displaystyle \operatorname {E} [\,{\text{gain from }}\$1{\text{ bet}}\,]=-\$1\cdot {\frac {37}{38}}+\$35\cdot {\frac {1}{38}}=-\$0.0526.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/75facfca3ff379ae7349d090b0f9fa9c81429516)

![{\displaystyle \operatorname {E} [X]=1\left({\frac {k}{2}}\right)+2\left({\frac {k}{8}}\right)+3\left({\frac {k}{24}}\right)+\dots ={\frac {k}{2}}+{\frac {k}{4}}+{\frac {k}{8}}+\dots =k.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/16fe11eff07887c16acf73bb812281b266e20acd)